19 June 2024

As the DiSSCo Transition Project (DTP) is in full swing, the DiSSCo Development team will be giving regular (bi-monthly) updates on its progress. These updates will mainly focus on Task 3.1 – Further develop the piloted Digital Specimen Architecture (DSarch) into a Minimum Valuable Product (MVP). However, as the development team is also involved in other tasks within DTP, as well as in other projects such as TETTRIs, we will also include updates on our work there.

By Sam Leeflang (DiSSCo lead developer)

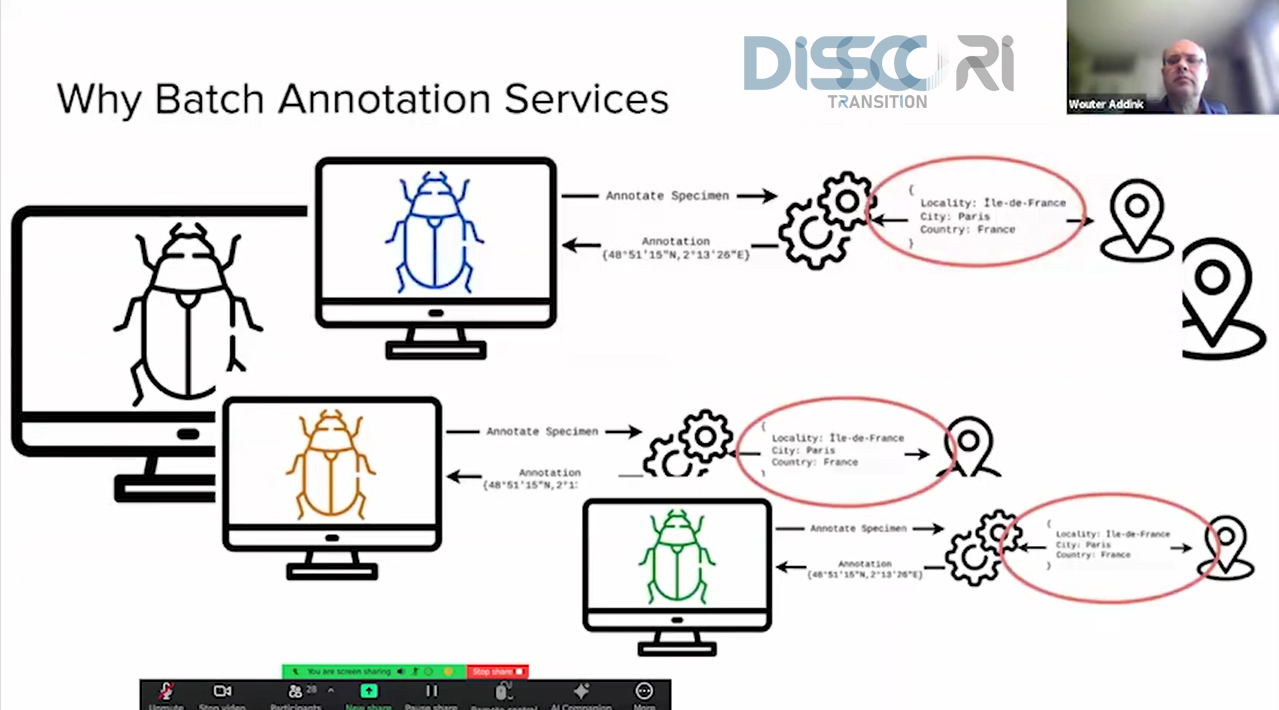

This third demonstration of the progress of Task 3.1 focusses on two main topics. The first is batch annotation. In a pre-recorded demo, Soulaine Theocharides explains why batch annotation is vital to the success of DiSSCo. Time is valuable, whether the agent is a human or a machine, and we want to avoid unnecessary duplicate work. If we can identify why one specimen was annotated, we can find all similar specimens and annotate them as well. Developing batch annotation has posed many challenges, but in this demo we show that we are close to solving them and integrating this functionality into DiSSCo.
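The idea behind batch annotation can be illustrated with a minimal sketch. It assumes that the reason for an annotation can be expressed as a set of field/value criteria. The `Annotation` class, the `batch_annotate` function and the flat specimen dictionaries are hypothetical simplifications, not DiSSCo's actual implementation or the openDS data model.

```python
from dataclasses import dataclass

# Hypothetical, simplified records: real DiSSCo digital specimens follow the
# openDS data model and are far richer than flat dictionaries.
Specimen = dict[str, str]

@dataclass(frozen=True)
class Annotation:
    target_id: str  # identifier of the annotated specimen
    field: str      # term being annotated
    value: str      # proposed new value

def matches(specimen: Specimen, criteria: dict[str, str]) -> bool:
    """True if the specimen has every field/value pair in the criteria."""
    return all(specimen.get(key) == value for key, value in criteria.items())

def batch_annotate(original: Annotation, criteria: dict[str, str],
                   specimens: list[Specimen]) -> list[Annotation]:
    """Copy one annotation to every other specimen that meets the same criteria."""
    return [
        Annotation(target_id=s["id"], field=original.field, value=original.value)
        for s in specimens
        if s["id"] != original.target_id and matches(s, criteria)
    ]

# Illustrative data: the first specimen was annotated because its country
# was recorded as "Nederland"; the same reasoning applies to spec-2 only.
specimens = [
    {"id": "spec-1", "country": "Nederland"},
    {"id": "spec-2", "country": "Nederland"},
    {"id": "spec-3", "country": "Belgium"},
]
original = Annotation("spec-1", "country", "Netherlands")
new = batch_annotate(original, {"country": "Nederland"}, specimens)
print([a.target_id for a in new])  # ['spec-2']
```

In practice the criteria would be evaluated as a query against the full specimen index rather than an in-memory list; the sketch only shows the "annotate once, apply to all similar records" principle.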

In the second part of the demo, we went over some data model changes. One of the most important things to get right before launching the MVP is the data models. Over the past months we took another step towards getting all the models ready: we resolved outstanding comments, added additional prefixes and reused more terms from existing vocabularies. The structure of schemas.dissco.tech changed slightly, as we moved the version number back one position. To document the data models, we decided to reuse the work done by Ben Norton. As a result, we launched a new website, dev.terms.dissco.tech, where we publish a human-readable version of our schemas, generated entirely from the JSON schemas.
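As an illustration of the general idea only (this is not the tooling reused from Ben Norton's work, whose details are not described here), a minimal Python sketch can render the top-level properties of a JSON schema as a human-readable table. It relies on the standard JSON Schema keywords `title`, `required`, `properties`, `type` and `description`; the function name `schema_to_markdown` and the example schema, including the term `example:id`, are hypothetical.

```python
import json

def schema_to_markdown(schema: dict) -> str:
    """Render the top-level properties of a JSON schema as a Markdown table."""
    required = set(schema.get("required", []))
    lines = [
        f"## {schema.get('title', 'Untitled schema')}",
        "",
        "| Term | Type | Required | Description |",
        "| --- | --- | --- | --- |",
    ]
    for name, prop in schema.get("properties", {}).items():
        prop_type = prop.get("type", "")
        if isinstance(prop_type, list):  # JSON Schema allows a list of types
            prop_type = " or ".join(prop_type)
        lines.append(
            f"| {name} | {prop_type} | {'yes' if name in required else 'no'} "
            f"| {prop.get('description', '')} |"
        )
    return "\n".join(lines)

# Hypothetical example schema with illustrative terms.
example_schema = json.loads("""
{
  "title": "Example specimen (hypothetical)",
  "type": "object",
  "required": ["example:id"],
  "properties": {
    "example:id": {"type": "string", "description": "Persistent identifier of the record"},
    "dwc:country": {"type": "string", "description": "Country of collection"}
  }
}
""")
doc = schema_to_markdown(example_schema)
print(doc)
```

Generating the documentation from the schemas themselves keeps the two from drifting apart: a change to a schema is reflected on the website the next time it is rebuilt.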

We concluded the demonstration with 15 minutes of questions. We would like to thank all participants and hope to see you at our next demo on 28 August, from 11:00 to 12:00 CEST.

The following topics were presented in the demo:

— Batch annotation for the Machine Annotation Service (MAS)

— New structure for schemas.dissco.tech online

— Restructuring of the openDS data models and new versions

— openDS Terms documentation in progress (dev.terms.dissco.tech)

— Updated MIDS calculation (based on the SSSOM mapping by Mathias Dillen)

— Test run with DataCite: 1491 test DOIs minted

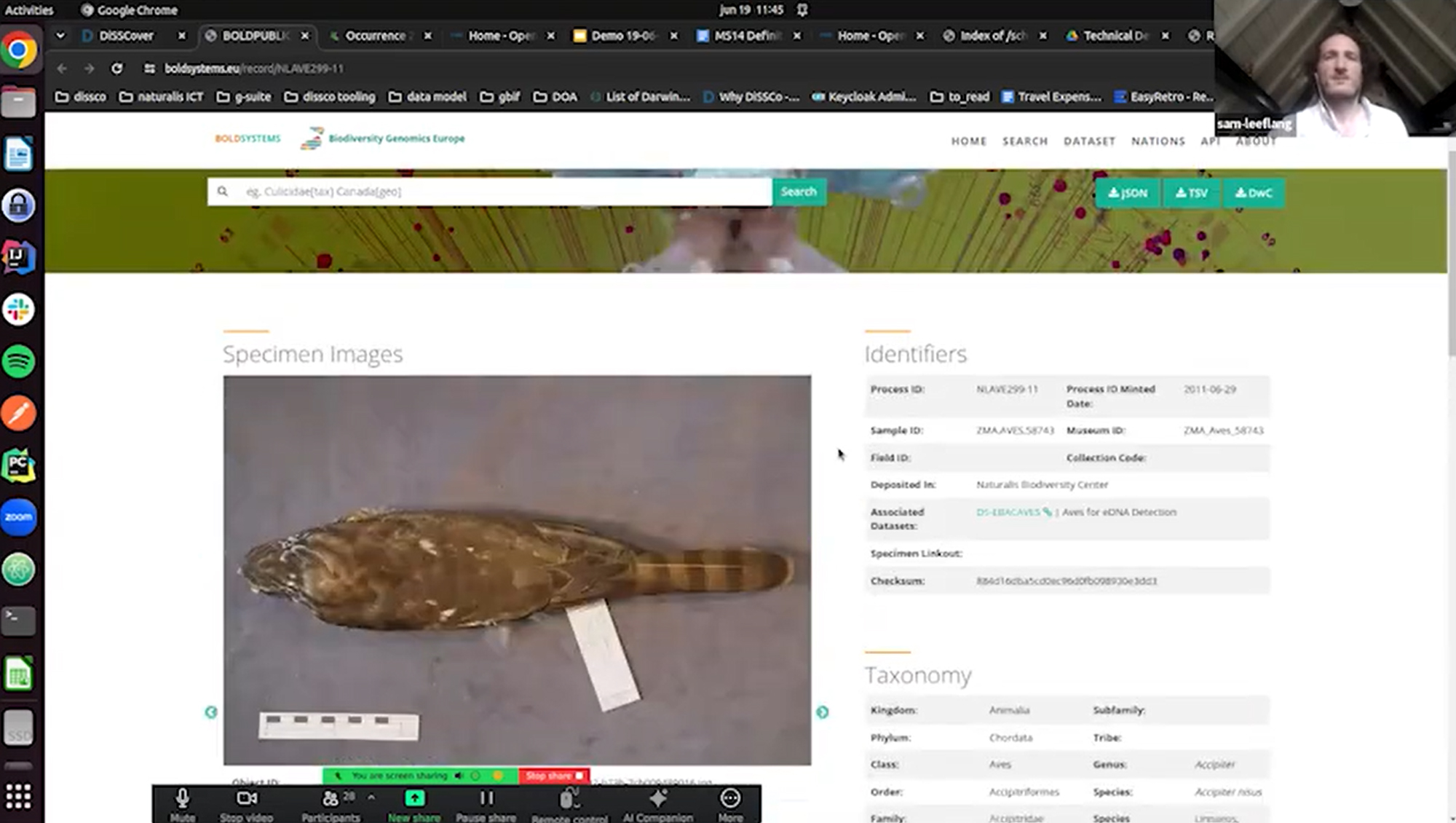

— (Draft) Linking service with BOLD EU

— End-user testing of DiSSCover

— Improvements to the DiSSCover frontend code

— In draft: RFC for a vocabulary server; authorization matrix

— Infrastructural upgrades: DOI/Handle servers as infrastructure-as-code

— Project outputs: MS14 Definition of a TRL6 Minimum Viable Product of core infrastructure; updated services landscape overview

— TETTRIs: Marketplace, first implementation of the backend based on Cordra

At our next demo, on 28 August, we hope to show the following topics:

— openDS Terms documentation and data model 1.0

— Implementation of the data model changes across the infrastructure

— Frontend code improvement

— Documentation for supplying data/metadata

— Improved Handle storage

— Move to the Naturalis observability stack for monitoring, logging and auditing

— Services uptime monitoring page

— Improved MAS support (support for encrypted secrets on deployment)

— Tombstoning specimen records

— Workshop and further implementation of the TETTRIs marketplace

— Plan for a Vocabulary Server

— Improved login/registration and integration with ORCID

Zoom link to demo session (August 28th, 11:00h-12:00h CEST)

Do you want to know more about the technical side of DiSSCo? DiSSCo offers several technical knowledge platforms to the scientific community:

DiSSCoTech: Get the latest technical posts about the design of DiSSCo’s Infrastructure

DiSSCo Labs: A preview of experimental services and demonstrators by the DiSSCo community

DiSSCo GitHub: Code hosting for DiSSCo software, version control and collaboration

DiSSCo Modelling Framework: A Wikibase tool that is configured to create an abstraction of the DiSSCo data model